Navigating the terrain of modern computing can feel fraught with complexity and potential missteps. Among these paths lies serverless architecture—a potent, innovative solution cloaked in a shroud of both excitement and apprehension. This guide provides a deep dive into the depths of serverless technology, unveiling its treasures as well as its traps. Get set to explore what serverless means, when it’s truly advantageous, and how you might sidestep pitfalls lurking beneath its shiny surface.

What is Serverless Architecture?

At first blush, “serverless” might seem like an odd term. After all, isn’t there always hardware involved somehow? Indeed there is, but in the context of “serverless”, we’re talking about how the developer experiences the technology.

Serverless architecture refers to applications where the provision of servers and infrastructure management are handled automatically by cloud providers. It effectively allows developers to simply focus on writing code for individual functions (hence also referred to as Function-as-a-Service or FaaS) without being bogged down with concerns around infrastructure maintenance and scalability: that’s taken care of behind-the-scenes.

Perhaps the most recognizable face in this crowd is AWS Lambda, one of several offerings alongside Azure Functions and Google Cloud Functions. With their promise of event-driven execution and automatic scaling, using such services can feel like stepping into tomorrow’s tech today, but only if used wisely!

Serverless empowers developers by removing barriers related to infrastructure management—an enticing advantage —but it doesn’t come without challenges. The question remains: How do you keep your balance while riding this wave called ‘serverless’?

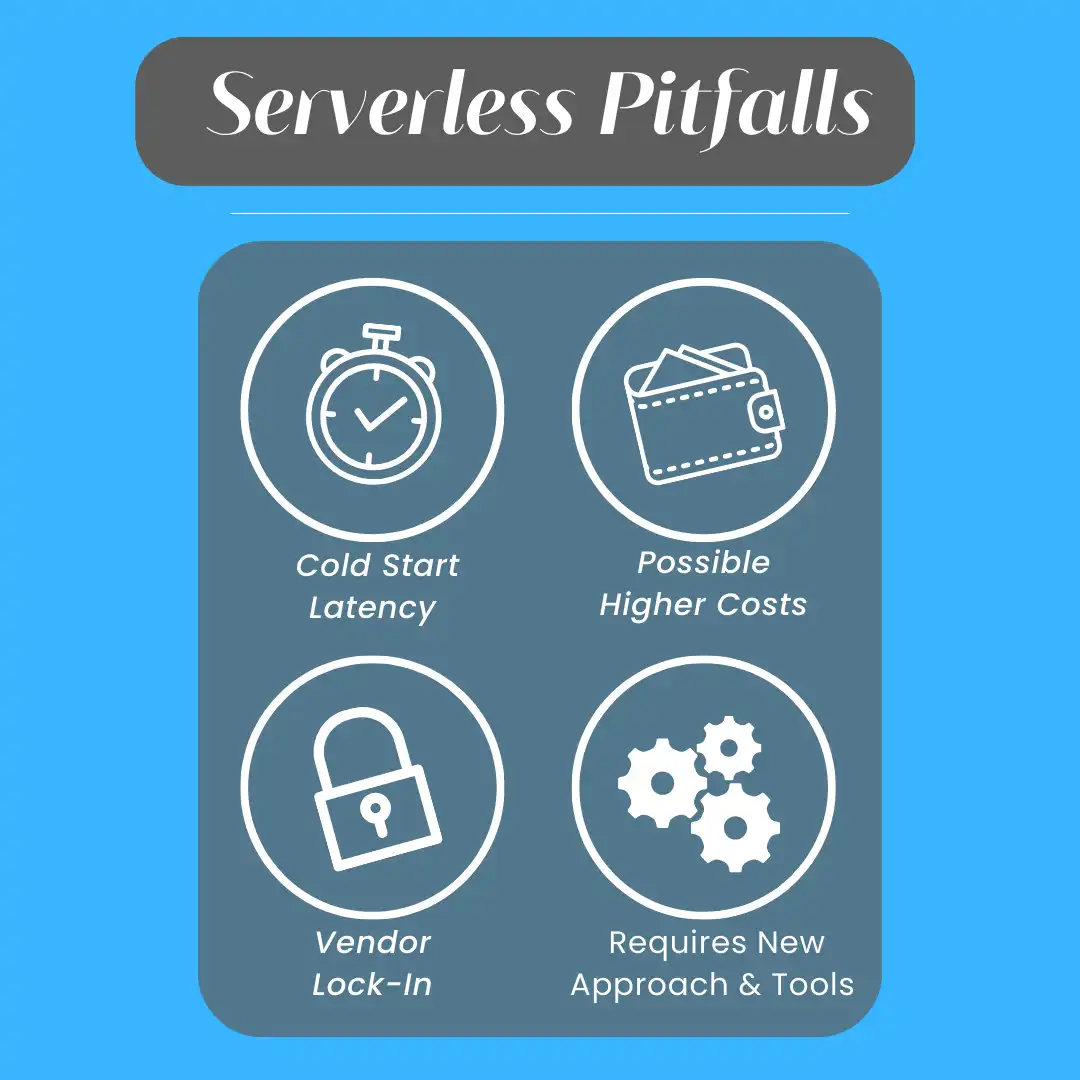

By understanding the common complaints about serverless (high cost, the cold start problem, vendor lock-in) along with the associated security risks and performance issues, you’ll be able to judge when to avoid serverless architecture and which alternatives to consider. Our end goal? To arm you with best practices that empower you to harness the potential of serverless confidently and competently!

Advantages of Serverless Architecture

Serverless architecture carries a plethora of benefits that spotlight its relevance in today’s ever-advancing technology world. You must, however, understand how to avoid serverless architecture pitfalls before reaping these advantages.

To begin with, in a serverless environment such as AWS Lambda, the burden of managing servers is reduced greatly. The vendor (AWS) handles system maintenance and capacity planning, granting developers more time to hone their creativity. They can focus primarily on coding and building applications without getting intensely tangled up in infrastructure management. This advantage fulfils the essence of the expression “work smarter, not harder”.

The next noteworthy benefit of serverless lies in its pricing policy. It operates under a ‘pay-as-you-go’ model which can be cost-effective for businesses — especially startups or medium-sized entities who are scaling up. With traditional architecture platforms, you generally pay for CPU time even when no active processing happens. But with serverless, you only incur costs based on your used compute time. This pricing arrangement both slashes operational expenses and maximizes efficiency.

Additionally, serverless architecture encourages an agile development process with fast application deployment. You can swiftly plug functionality into your app through microservices without going through extensive build-and-release cycles every so often. This also promotes a more agile approach, deploying small and often instead of the traditional waterfall approach.

This leads us to another invaluable merit: scalability and flexibility. As your user base grows, or your traffic rises and falls between peak and off-peak times, the platform scales up or down with demand to keep performance steady.

Key Points:

- Server maintenance becomes a responsibility of the vendor.

- Pay-per-use principle can boost cost-effectiveness.

- Quick deployment assists agile development.

- Automatic scalability accommodates fluctuating traffic.

Remember though: despite these advantages, there are still potential downsides, including cost and vendor lock-in, which we’ll look at next. We need to really understand these issues so we can avoid the common pitfalls of serverless architecture and capitalize on its strengths.

Common Complaints about Serverless Architecture

While the serverless architecture certainly comes with its impressive advantages, like any technology, it is not devoid of challenges. Here are some common issues developers often encounter while working within serverless environments.

1: High Cost

You might imagine that a ‘server-less’ structure would excel in cost efficiency, and while this can be true for many small to medium-sized applications, there’s more than meets the eye. For continuously running functions, or at very high scale, costs can climb quickly. The cost to run a Lambda function non-stop for an hour is usually 4-5x the price of an equivalent EC2 instance. Furthermore, AWS Lambda includes additional charges for invocations, and peripheral services can inflate prices unexpectedly.

If you’re processing thousands of requests a second and running hundreds of Lambda functions concurrently, all of the time, it may be worth estimating your running costs if you move to server-based architecture.

The flip side of this is that companies often make the “Cost Optimisation” choice to move to EC2 before they have anywhere near the traffic level to justify it. They end up paying more for servers that are sat almost idle, then have to pay their team to manage and maintain these servers instead of working on the problem. They’d not considered the total cost of ownership.
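To make that comparison concrete, here is a rough sketch of the arithmetic for both sides. Every price in it is an assumption for illustration only, as are the function and parameter names; check the current AWS pricing pages and your own salary costs before drawing conclusions.

```python
from dataclasses import dataclass

SECONDS_PER_MONTH = 30 * 24 * 60 * 60


@dataclass(frozen=True)
class LambdaPricing:
    # Assumed illustrative prices, not authoritative; verify against current AWS pricing.
    per_gb_second: float = 0.0000166667
    per_million_requests: float = 0.20


def lambda_monthly_cost(requests_per_second, avg_duration_s, memory_gb,
                        pricing=LambdaPricing()):
    """Estimate monthly Lambda spend for a steady request rate."""
    requests = requests_per_second * SECONDS_PER_MONTH
    gb_seconds = requests * avg_duration_s * memory_gb
    compute = gb_seconds * pricing.per_gb_second
    invocations = requests / 1_000_000 * pricing.per_million_requests
    return compute + invocations


def server_monthly_tco(instance_count, instance_monthly_price, ops_monthly_wages):
    """Total cost of ownership: the servers plus the people who run them."""
    return instance_count * instance_monthly_price + ops_monthly_wages


if __name__ == "__main__":
    # Hypothetical workload: 200 ms average duration at 512 MB of memory.
    for rps in (1, 100, 5000):
        print(rps, "req/s:", round(lambda_monthly_cost(rps, 0.2, 0.5), 2))
```

Running the numbers at a few traffic levels, with the operations wages included on the server side, is usually enough to show which side of the break-even point you are on.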

2: Cold Start Problem

Developers frequently moan about what’s known in technical circles as the ‘cold start’ problem. This happens when you call a Lambda function but there aren’t any live instances of that function yet, or they’re all busy. In this case, AWS needs to create a new instance of your function and download your code onto it before it can run your request. This extra work before processing the request is what people call a cold start. How many milliseconds of delay it adds depends on a range of things, including the size of your function code and the language you are using. The diagram below shows when and why cold starts happen. You can learn more about cold starts from one of my videos on Cold Starts.

Cold starts have become a lot less of an issue in the last few years. It used to be common to see cold starts over 1s but now, with the right optimisations and practices, you’ll have delays of 0.2-0.4s.
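One commonly cited practice is to do expensive setup once, at module level, so that only the cold start pays for it and warm invocations reuse the result. The sketch below simulates this; `_load_config`, the sleep and the table name are hypothetical stand-ins for real setup work such as creating SDK clients.

```python
import time

_INIT_COUNT = 0


def _load_config():
    """Stand-in for slow setup work (hypothetical)."""
    global _INIT_COUNT
    _INIT_COUNT += 1
    time.sleep(0.05)  # simulate a slow initialisation step
    return {"table": "example-table"}  # hypothetical value


# Module-level code runs once per execution environment, i.e. during the cold start.
CONFIG = _load_config()


def handler(event, context):
    # Warm invocations reuse CONFIG instead of repeating the setup.
    return {"table": CONFIG["table"], "initialisations": _INIT_COUNT}
```

Calling `handler` repeatedly in the same process reports a single initialisation, which mirrors what happens across warm invocations of one function instance.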

3: Vendor Lock-in

This one affects companies who decide they need to hop between different cloud providers. Serverless architectures are tailored to a specific provider’s environment (for instance AWS Lambda). So whilst your code may be portable on paper, it becomes a tough task to migrate from one vendor to another or to design a solution that runs in multiple clouds.

The reasons behind vendor lock-in vary from case to case, but on closer inspection, talking about this with clients, prospects and architects from every sector of the tech world, I’ve found that in a lot of cases it’s based on irrational fears. Fear that AWS is going to double their prices (I’ve never seen any service even increase in price). Fear that AWS might close their account or even that AWS might go bust and leave them stuck without anywhere to run their software.

When these fears are addressed and the risk is compared against the risk of a competitor stealing their clients or a developer accidentally deleting the production database, then you are making an informed decision about vendor lock-in.

4: Requires a Different Approach and Different Tools

There is a paradigm shift involved in moving towards a serverless architecture: both a conceptual shift in how we approach designing software systems and practical changes in our development tool sets.

The solution design patterns that traditional architects have relied upon for decades often become anti-patterns in a serverless application. The unique constraints and benefits of Serverless mean that you need to change the patterns that you use. Serverless architecture is also a lot more involved, as it is much more closely coupled to application functionality.

Traditional monitoring tools may also turn out unhelpful here given that we’re no longer interacting directly with servers. Such abstractions make gaining visibility into execution flows uniquely challenging, thus highlighting the need for specialized monitoring solutions and a fresh approach to deployment pipelines.

It really is not as simple as just lifting your existing setup directly into serverless without any adaptation!

Security Risks of Serverless Architecture

Serverless architecture, despite its perks and efficiencies, isn’t without potential pitfalls. It’s vital to understand the inherent security risks tied to it, especially if you’re planning to implement or improve your implementation. Some of the most problematic security issues (OS vulnerabilities, network attacks) are handled by AWS, but there are some security risks more obvious in a serverless app.

1: Increased Attack Surface

The first factor you need to be aware of is what we refer to as an ‘Increased Attack Surface’. As serverless architecture tends to rely on a microservices model, where applications are broken down into smaller services, this leads to numerous services each with their own endpoints. This multiplicity can increase your system’s attack surface, providing more entry points for attackers. Therefore, proper endpoint protection is imperative in serverless environments.

2: Security Misconfiguration

Secondly, we encounter ‘Security Misconfigurations’. These are often caused by human error during setup or updates. An inadvertent mistake, such as a misconfigured setting or permission, can give an attacker access they wouldn’t normally have. Automation of deployment processes using Infrastructure as Code (IaC) tools like AWS CloudFormation can help minimize such oversights and maintain secure configurations.

The most common instances of this that I have seen are having public S3 buckets and forgetting to have any authorisation on your API endpoints. Both of these can expose data that should be secure, and also enable “denial of wallet” attacks: repeatedly calling your API or downloading files from S3 to increase your AWS costs.

3: Broken Authentication

Thirdly, ‘Broken Authentication’ poses another risk with serverless architecture. In essence, this refers to errors in the user verification mechanisms the system uses; when these fail, malicious entities could gain unauthorized access. Maintaining robust authentication processes is key here. AWS has a service to handle this (Amazon Cognito), but there are loads of other ways to secure your application endpoints.

One way I like to reduce this risk is to have tests which attempt to call each of your endpoints without credentials or with invalid details.
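A minimal sketch of such a test is shown here. The endpoint list, the `send` callable and `fake_send` are all hypothetical: in a real suite `send` would make an HTTP request against your deployed API and return the status code.

```python
from typing import Callable, Iterable, List, Tuple

# (method, path) pairs; hypothetical endpoints for illustration.
ENDPOINTS = [("GET", "/users/me"), ("POST", "/orders")]

# Requests with no credentials, then with obviously invalid ones.
BAD_HEADERS = ({}, {"Authorization": "Bearer not-a-real-token"})


def find_unprotected(
    endpoints: Iterable[Tuple[str, str]],
    send: Callable[[str, str, dict], int],
) -> List[Tuple[str, str, dict]]:
    """Return every call that was NOT rejected with 401 or 403."""
    unprotected = []
    for method, path in endpoints:
        for headers in BAD_HEADERS:
            status = send(method, path, headers)
            if status not in (401, 403):
                unprotected.append((method, path, headers))
    return unprotected


def fake_send(method, path, headers):
    # Stand-in for a real HTTP call; pretends POST /orders forgot its authoriser.
    return 200 if path == "/orders" else 401


if __name__ == "__main__":
    for method, path, headers in find_unprotected(ENDPOINTS, fake_send):
        print("UNPROTECTED:", method, path, headers)
```

Keeping the transport injectable means the same check can run against a local stub in unit tests and against a staging deployment in CI.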

4: Over Privileged Functions

Finally, ‘Over Privileged Functions’ pose a further security concern. The term means that a function has more permissions than it needs, which can open the door to privilege escalation attacks. Addressing this involves implementing the principle of least privilege (PoLP), where each function has just enough permissions to fulfil its role.

Using least privilege reduces the opportunities for malicious attacks, but also for accidental damage. Ever copied a database write command from somewhere else in the repo, only to forget to change the table you’re writing to? Least privilege can save your arse.
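As an illustration, here is what a narrowly scoped IAM policy might look like, together with a small check that flags wildcards. The account ID, region, table name and the `wildcard_findings` helper are hypothetical, and the check is a rough heuristic, not a full policy analyser.

```python
# Hypothetical account ID, region and table name.
ORDERS_TABLE_ARN = "arn:aws:dynamodb:eu-west-1:123456789012:table/orders"

# The function may write items to one table and nothing else.
WRITE_ORDERS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["dynamodb:PutItem"],
            "Resource": ORDERS_TABLE_ARN,
        }
    ],
}


def _as_list(value):
    # IAM accepts either a single value or a list in these fields.
    return value if isinstance(value, list) else [value]


def wildcard_findings(policy):
    """List Allow statements whose actions or resources contain a wildcard."""
    findings = []
    for index, statement in enumerate(_as_list(policy["Statement"])):
        if statement.get("Effect") != "Allow":
            continue
        for key in ("Action", "Resource"):
            for value in _as_list(statement.get(key, [])):
                if "*" in value:
                    findings.append(f"statement {index}: {key} {value!r}")
    return findings
```

With a policy like this, the copy-and-paste mistake described above fails with an access error on the wrong table instead of silently writing to it.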

The world of serverless holds unlimited promise and potential for speed and efficiency but doesn’t come devoid of challenges in return. Keep abreast of best practices and execute regular audits to ensure your system remains impregnable against these omnipresent security risks.

Performance Issues with Serverless Architecture

When implementing serverless architecture, it’s necessary to pay attention to potential performance issues. These can significantly impact the efficiency and overall operation of your applications.

1: Latency and Timeouts

A frequently flagged concern with serverless is latency – the delay before a transfer of data begins following an instruction for its transfer. The ‘cold start’ issue that we discussed before plays a significant role here, causing noticeably slower response times due to the time taken to initialise a function. Whilst cold starts may only happen 0.5% of the time, if you need to guarantee a specific response time, Lambda may not be the tool for you.

There are some other issues with serverless when building services that are very latency sensitive. Using API Gateway can add 100-200ms on top of your Lambda execution time.

Serverless platforms also impose timeouts on function executions. For AWS Lambda, the maximum execution duration per request is 900 seconds (15 minutes). Therefore, tasks that can’t be completed in a short processing window might run into problems in a serverless environment. There are other services that are designed for longer running or async tasks (Step Functions, Fargate) but depending on your exact requirements they may also be unsuitable.
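If a task might brush up against the timeout, one defensive pattern is to watch the remaining time and hand back unfinished work before Lambda kills the invocation. This is a sketch under assumptions: `process`, the event shape and the safety margin are hypothetical, while `get_remaining_time_in_millis()` is the method exposed by the Python Lambda context object.

```python
SAFETY_MARGIN_MS = 10_000  # assumed buffer for cleanup; tune for your workload


def process(item):
    """Hypothetical unit of work."""
    return item * 2


def handler(event, context):
    items = event["items"]
    done = []
    for index, item in enumerate(items):
        # Stop early if the invocation is about to hit its timeout.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Hand the rest back so the caller can re-invoke or queue it.
            return {"done": done, "remaining": items[index:]}
        done.append(process(item))
    return {"done": done, "remaining": []}
```

The caller (or a queue in front of the function) then decides what to do with `remaining`, which keeps each invocation comfortably inside the limit.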

2: Network Bandwidth and Throughput Limitations

Network bandwidth and throughput limitations may affect your application’s performance as well. If your application requires high network throughput or should handle large quantities of data simultaneously, you could be facing some hurdles, or elevated costs.

A common reason this happens in serverless architectures is that they are typically run within containers that have lower network capability than traditional servers or virtual machines.

3: Autoscaling Isn’t Infinite

Autoscaling, even though an attractive feature of serverless architecture, isn’t without its challenges. While many believe that autoscaling under serverless is infinite, there are limits (service quotas) imposed by the vendor, which often go unnoticed until demand surges unexpectedly.

With Lambda, for example, there is an initial autoscaling rate of 3000 (or 500 in smaller regions) and then a limit of 500 extra concurrent lambdas every minute. If you have huge and sudden spikes in traffic (half time in a sports game) then you may hit the Lambda scaling limits.

While autoscaling lets us handle increased loads automatically and without thought in most cases, there’s still a limit – we can’t simply scale infinitely.
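A quick way to sanity-check a traffic spike against the figures quoted above (an initial burst of 3000, then 500 extra concurrent executions per minute) is a small calculation like this one. The function name is hypothetical and the defaults simply restate those figures; confirm the current quotas for your region before relying on them.

```python
import math


def minutes_to_reach(target_concurrency, initial_burst=3000, per_minute=500):
    """Whole minutes needed before the target concurrency is available."""
    if target_concurrency <= initial_burst:
        return 0
    return math.ceil((target_concurrency - initial_burst) / per_minute)


if __name__ == "__main__":
    print(minutes_to_reach(2_000))   # 0: fits inside the initial burst
    print(minutes_to_reach(10_000))  # 14: (10000 - 3000) / 500
```

If the answer is longer than your spike lasts, requests will be throttled in the meantime, so it is worth planning for queues or retries.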

4: AWS Service Limits

Speaking of limitations, AWS’s default service limits pose another potential challenge when dealing with high-performing applications within a serverless setup.

For example, AWS imposes a concurrent execution limit, limiting the number of simultaneous function executions. New AWS accounts have a default limit of 1000 executions across all functions within an account. This is a soft limit so you can request to have this increased, but there are some limits which are hard limits, such as the number of Lambda Layers you can attach to a single function or the limit of 10 concurrent DetectEntities jobs you can have in AWS Comprehend. If your architecture depends on these features then you’ll hit a point where your business can’t scale any more.

When to Avoid Using Serverless Architecture

Serverless architecture is certainly appealing in many ways. However, that doesn’t mean it fits every scenario. There are specific instances when traditional methods of computing might be a better option. You’ve got to understand the advantages and limitations of serverless systems and decide for your use case whether it’s a suitable and beneficial approach to take.

Here are some key factors that might point you away from Serverless:

1: Training AI or Machine Learning Models

Firstly, training AI can sometimes conflict with serverless setups. If your needs include sustaining high performance while handling massive data loads and complex calculations, serverless might not be the best fit on a cost basis. With known workloads like this you aren’t getting a lot of the benefits of Serverless, but still paying the price.

Therefore, companies working on projects involving big data or machine learning may want to think twice before going completely serverless. Traditional servers are often more cost effective when handling such extensive workloads. You might still choose to have Serverless as part of your architecture. Using Kinesis and Lambda to ingest and format large amounts of data can be incredibly effective, storing the prepared data into S3 where a traditional EC2-architecture can run the machine learning or computations.
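The ingest-and-format step can be sketched as a Lambda handler like the one below. The payload fields, `format_record` and the `store` callable are hypothetical; the only AWS-specific detail relied on is that Kinesis delivers each record’s payload base64-encoded under `record["kinesis"]["data"]`.

```python
import base64
import json


def format_record(raw: bytes) -> dict:
    """Hypothetical clean-up step: parse JSON and keep only the fields we need."""
    payload = json.loads(raw)
    return {"user_id": payload["userId"], "value": float(payload["value"])}


def handler(event, context, store=print):
    # Decode and format every record in the batch.
    prepared = [
        format_record(base64.b64decode(record["kinesis"]["data"]))
        for record in event["Records"]
    ]
    # `store` is a placeholder for writing the prepared batch to S3.
    store("\n".join(json.dumps(row) for row in prepared))
    return {"prepared": len(prepared)}
```

Lambda only passes `event` and `context`, so the default `store` is used in production unless you replace it; injecting it makes the formatting logic easy to test without touching S3.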

One exception to this rule is when high performance computation is needed, but setting up and managing the infrastructure would delay the project. That was the case during COVID-19, where research teams used Lambda for their modelling and machine learning. When they had designed a new model they could start the training on 3000 instances of 10GB Lambda functions all at the same time. This resulted in the scientists being able to get their results faster, and without having to set up and manage a huge cluster of servers.

2: At Very Large Scale

Secondly, at very large scale the infrastructure cost of using Serverless will be far higher than traditional architecture. At this point you can decide whether it is worth investing in an in-house operations team. If the total cost of ownership (infrastructure cost + operations team wages) is far lower than the serverless costs, it might be worth switching.

Additionally, having an in-house operations team means you can utilise containers and reduce ‘vendor lock-in’, giving you flexibility to shift between different technologies as needed without being reliant solely on a third-party provider’s capabilities or offerings.

This sounds perfect, but there might still be reasons to stick with Serverless. Developers are able to configure, deploy, monitor and test Serverless applications without needing to think about the underlying servers. Running a traditionally architected application, even with an operations team, can add work to your developers. Some companies have gone as far as to re-build their own version of Lambda in a ‘Private Serverless’ but this takes a lot of upfront investment and some incredibly smart DevTool engineers.

3: When You Have Specific Requirements that are Unsuitable for Serverless

Lastly, certain requirements simply don’t suit the serverless model due to their specific natures. For example:

- Long-running applications: Serverless functions operate under set time constraints and aren’t suitable for tasks needing extended periods of uninterruptible processing. There can be ways to do this serverlessly (Step Functions), but it may be simpler to use traditional architecture.

- Stateful applications: Stateful apps that need to share local memory or disk space among various functions won’t run as efficiently on typical stateless serverless architectures. Yes, you can attach EFS to Lambda, but it’s never going to work exactly the same.

- Legacy Apps: Migrating legacy applications to a serverless architecture often involves substantial efforts and can inadvertently introduce unforeseen complexities. You can always leave the core of the legacy app on servers but build any new features using serverless.

- Super Low Latency Apps: There is some inherent latency added when making requests to serverless applications. This may be just 50ms, but if you need the minimum latency possible (aircraft telemetry) then it might be best to go with traditional infrastructure, even moving to on-premise to reduce the latency further.

So, while embracing serverless technologies might seem like the modern trend, it’s crucial to weigh these considerations. A thoughtful evaluation of your specific needs will ensure you make the best possible choice for your deployment environment. In the end, it’s about finding what works best for you – be that traditional servers or going serverless.

Alternatives to Serverless Architecture

There’s no denying that serverless architecture holds significant benefits, cutting down on both costs and operational efforts. However, it might not be the perfect solution for all scenarios. If you find yourself grappling with issues we’ve discussed, you might want to consider alternatives such as containers and virtual machines.

1: Containers

Containers have become a popular alternative as they offer a higher level of flexibility than serverless environments, but can retain some of the scalability benefits. One main advantage is their ability to run virtually anywhere, a capability that significantly reduces the risk of vendor lock-in. In layman’s terms, containers allow you to package your software along with all its dependencies so it can run uniformly and consistently across different computing settings.

While container technology does require more management compared to serverless, it does provide explicit control over the operating environment. This means better compatibility for legacy apps and those designed using frameworks unsuitable for serverless setups.

You can also host containers in Fargate, minimising your management overhead whilst having the benefit of the more EC2-like billing model.

Moreover, you can provision your containers so that they scale automatically with your traffic. This won’t be as responsive as with Lambda, but will do a pretty good job with the everyday ebb and flow of traffic.

2: Virtual Machines

Virtual Machines (VMs), on the other hand, bring an even greater amount of control compared to containers while also ensuring isolation from other running processes—a feature paramount for secure operations. VMs essentially emulate an entire computer system complete with operating system (OS), hardware configurations, installed programs etc., making them best-equipped in terms of compatibility.

This absolute control comes at a cost though: administrative overhead. Operating VMs involves more housekeeping tasks such as monitoring resource usage levels and managing security patches for the OS—things developers don’t generally worry about when dealing with serverless infrastructure. However, this offers unparalleled customisation and control, which could be crucial for specialised operation requirements.

So, while serverless architecture indeed holds its charm, containers and Virtual Machines provide alternatives aligned closer with traditional computing models—offering greater control, decreased risk of vendor lock-in and solutions for potential cold start latency problems. The choice largely depends on your unique needs and the kind of flexibility and responsibility you’re willing to undertake in your development process.

Best Practices for Avoiding Serverless Issues

It’s crucial to understand that the perks of serverless architecture can also come with pitfalls. However, most of these can be mitigated by following best practices when creating your applications.

1: Use API Gateways as Security Buffers

API gateways play an integral part in managing and securing serverless architectures. They act as a protective shield against potential threats while controlling access to specific endpoints backed by Lambda functions or other AWS services.

Using API gateways as security buffers helps by:

- Offering comprehensive threat protection and threat detection.

- Easily connecting to authorisation mechanisms (Cognito or Lambda Authorisers)

- Creating HTTP APIs that allow streamlined management and increased efficiency for workloads requiring public-facing APIs.

This efficient approach secures your serverless architecture while allowing room for innovation.
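To show what connecting an authorisation mechanism can look like, here is a minimal sketch of a token-based Lambda authoriser for an API Gateway REST API. The shared secret and principal names are hypothetical placeholders; a real authoriser would normally verify a signed token instead of comparing against a constant.

```python
import hmac

# Hypothetical shared secret, for illustration only.
EXPECTED_TOKEN = "example-secret-token"


def handler(event, context):
    """Return an IAM policy that allows or denies the incoming request."""
    token = event.get("authorizationToken", "")
    # Constant-time comparison avoids leaking information through timing.
    allowed = hmac.compare_digest(token.encode(), EXPECTED_TOKEN.encode())
    return {
        "principalId": "example-user" if allowed else "anonymous",
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": "execute-api:Invoke",
                    "Effect": "Allow" if allowed else "Deny",
                    "Resource": event["methodArn"],
                }
            ],
        },
    }
```

Because the check lives at the gateway, the functions behind it never run for unauthenticated callers, which also limits the “denial of wallet” exposure.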

2: Data Separation and Secure Configurations

Data separation is vital in avoiding concerns related to serverless security. Segmenting data across multiple databases can reduce the risk of accidental breaches due to mismanagement.

In addition, secure configurations ensure these parts remain well-protected and function optimally. To achieve this:

- Perform regular auditing of configuration settings.

- Implement principle of least privilege (PoLP) – granting minimum possible privileges to each user.

By combining data separation with secure configurations, you significantly minimise data leak risks whilst maximising performance in your serverless architecture.

3: Minimize Privileges

Another facet involves minimising privileges – apply the principle of ‘least privilege’. This simply means giving the bare minimum permissions necessary for a task. It limits exposure should there be any malicious activity detected, thus reducing damage potential drastically. Crucially, it represents one possible counter-measure against the risk commonly known as ‘over privileged functions’.

Remember:

- Each function must only have access needed to perform its duty.

- Regularly review permissions for relevance.

- Take very careful note of functions (or users) that are able to make changes to IAM.

4: Separate Application Development Environments

A best practice for enhancing security and performance in your serverless architecture is to separate your application development environments. Splitting ‘development’, ‘staging’, and ‘production’ environments into separate AWS accounts is recommended.

This practice provides room for increased developer freedom without risking the production functionality and data. There’s no chance a junior developer accidentally deletes the production database, or a development function writes to the production database or sends emails to production users.

Having each environment in its own AWS account also makes load testing in the staging environment safer, since staging traffic can’t eat into the production account’s service limits. This robust structure minimises the risk of premature production rollouts, leading to an overall more secure and efficient working environment.

Achieving an effective serverless deployment can be a breeze when you know how to avoid common setbacks. By employing these industry-standard best practices, you stand a good chance of dodging those well-known traps linked with Serverless architectures while enjoying all their benefits.

FAQ

Serverless or microservices—which is better?

Serverless and microservices aren’t mutually exclusive. In fact, most of the clients I work with use a microservice approach but deploy those microservices using Serverless. In traditional architecture, each of those microservices would be a server or cluster of servers, but breaking your application into microservices and then building them with Serverless is a great approach.

Serverless or containers—which is better?

Many architects grapple with deciding between serverless and containers. However, the question isn’t about which approach is unequivocally “better.” Both figure prominently in the modern software development landscape and provide unique benefits. The choice largely depends on the context.

Serverless architecture, such as AWS Lambda, shines when you need to scale automatically and pay only for what you use. It’s fantastic for event-driven code that doesn’t require a continuous running state.

On the other hand, containers are excellent when you want fine-grained control over your components’ lifecycle. They can offer cost savings when used at large scale and can resolve some of the limitations of Serverless such as the 15 minute timeout.

Hence, I recommend evaluating the specific needs of your project before making a decision.

How do you tell whether a service is Serverless?

Identifying if a service qualifies as ‘serverless’ mostly revolves around one principle: does it abstract servers away? Typically, a serverless offering manages resource allocation dynamically so users don’t have to worry about infrastructure management.

Look for these characteristics:

- Automatic scaling: Does the service react to demand variations without manual intervention?

- Billing per usage: Are costs tied to exact consumption rather than provisioned capacity?

- No server administration: Is there zero requirement for system patching, maintenance or troubleshooting?

If all three conditions align, chances are high that it falls within serverless boundaries.

Can an entire application be run serverless?

Absolutely! An entire application can indeed run on serverless architecture. Many companies already harness this model to reduce operational complexities and embrace full scalability potential.

It involves piecing together managed services like API gateways, user authentication (Cognito), databases (DynamoDB), computing power (Lambda), and storage systems (S3). Real-time applications also incorporate additional services like AWS AppSync or WebSockets.

You can even host the frontend in Serverless. The most common approach is by hosting it in S3, using CloudFront to add local caching and then Route53 for the domain name.

A more advanced approach to frontend hosting is to use Lambda to server-side render the website. This means you gather all of the data required for that page in the Lambda function, generate the full view and send the completed page to the user, instead of having to make follow-up requests (getUser, getBlogContent, etc.) in the user’s browser.
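A stripped-down sketch of that idea follows. The `get_user` and `get_blog_content` helpers return hard-coded hypothetical data where a real function would query a database, and the path parameter names are assumptions; the returned dictionary follows the response shape used by API Gateway’s Lambda proxy integration.

```python
import html


def get_user(user_id):
    """Hypothetical data lookup; in practice this might read from DynamoDB."""
    return {"name": "Ada"}


def get_blog_content(post_id):
    """Hypothetical data lookup."""
    return {"title": "Hello <world>", "body": "First post."}


def handler(event, context):
    params = event.get("pathParameters") or {}
    # Gather everything the page needs before rendering.
    user = get_user(params.get("userId"))
    post = get_blog_content(params.get("postId"))
    # Escape dynamic values so stored content cannot inject markup.
    page = (
        "<!doctype html><html><body>"
        f"<h1>{html.escape(post['title'])}</h1>"
        f"<p>{html.escape(post['body'])}</p>"
        f"<footer>Signed in as {html.escape(user['name'])}</footer>"
        "</body></html>"
    )
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "text/html; charset=utf-8"},
        "body": page,
    }
```

The browser receives one finished page, at the cost of doing the data fetching and rendering inside the function on every request.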

However, remember that while entirely possible, going full serverless necessitates a thoughtful design to avoid pitfalls we’ve discussed in this post.

When should you not use serverless?

Despite its tremendous advantages, there are scenarios when using serverless may be counterproductive:

- Long-running tasks: Serverless has timeout limits. AWS Lambda functions, for instance, cap at 15 minutes.

- Cold start latency: Sporadic usage patterns can lead to a performance hit during cold starts.

- High compute requirements: Running hefty computational workloads constantly on serverless can rack up costs.

- Deeply connected systems: Implementations with numerous interdependencies might encounter subsequent problems when decomposed into multiple functions.

I advise carefully assessing your project’s architecture and unique requirements before rushing in blindly to embrace serverless technology.
